Deploy Operations-Ready High Performance Computing (HPC) applications using MontyCloud DAY2

Samrat Priyadarshi, Dec 2, 2020 11:43:00 AM

Running High Performance Computing (HPC) applications on-premises is complicated. The infrastructure can be complex to build and maintain, often runs over budget, and does not scale well. With AWS, it is easier for customers to build scalable and cost-efficient HPC infrastructure in the cloud. However, deep cloud infrastructure expertise is required to build, deploy, and operate HPC environments on AWS. In this blog post, Samrat (Sam) Priyadarshi, a Senior Cloud Solutions Engineer at MontyCloud, explains how researchers and students at Saint Louis University use MontyCloud DAY2 to provision and manage HPC clusters on AWS using Slurm and AWS Batch. Customers like Saint Louis University can save up to 70% in their total cost of cloud operations with DAY2.

– Sabrinath Rao

Researchers and students who need HPC environments often find themselves dealing with infrastructure, operational, and security overheads that extend well beyond their job requirements and skill sets. Customers often have under-utilized, out-of-warranty clusters that don’t provide optimal performance. They want to deploy smaller clusters for research groups and individual students while keeping management complexity to a minimum. They also find it hard to hire the right talent to maintain these environments while keeping costs in check.

For example, one of our customers, Saint Louis University (SLU), uses the DAY2 Slurm Blueprint to provision HPC clusters on-demand. Their Cloud team has increased productivity and is helping drive innovation faster by giving researchers self-service provisioning capabilities, along with easy-to-use operational automation of the HPC infrastructure. The SLU team has saved several weeks of manual effort and continues to save both time and cost as researchers consume more capacity for their innovation.

With DAY2, SLU researchers choose between two options, HPC using Slurm and HPC using AWS Batch, and instantly provision clusters that come with ready-to-use self-service tasks.

In this blog post, I am going to share how MontyCloud DAY2 enables customers to deploy Operations-Ready HPC applications that save time, reduce costs, and accelerate research, all in just a few clicks. With DAY2, researchers can offload the burden of well-architected infrastructure buildout, deployment, and operations, and instead focus on their innovation. With this solution, customers can deploy right-sized HPC environments on-demand and instantly start submitting jobs without any of the heavy lifting involved with current solutions.

With DAY2, customers can save several weeks of effort and 40-70% in cloud costs

Well-architected HPC blueprints in DAY2 are ready-to-use templates that help deploy cost-efficient, compliant, and secure HPC applications when used in conjunction with the DAY2 platform. Because the blueprints are designed for compliance and cost savings, and because deployment takes only a few clicks, customers can save several weeks of effort and run cost-optimized HPC environments.

Following are the benefits of using the blueprints in DAY2:

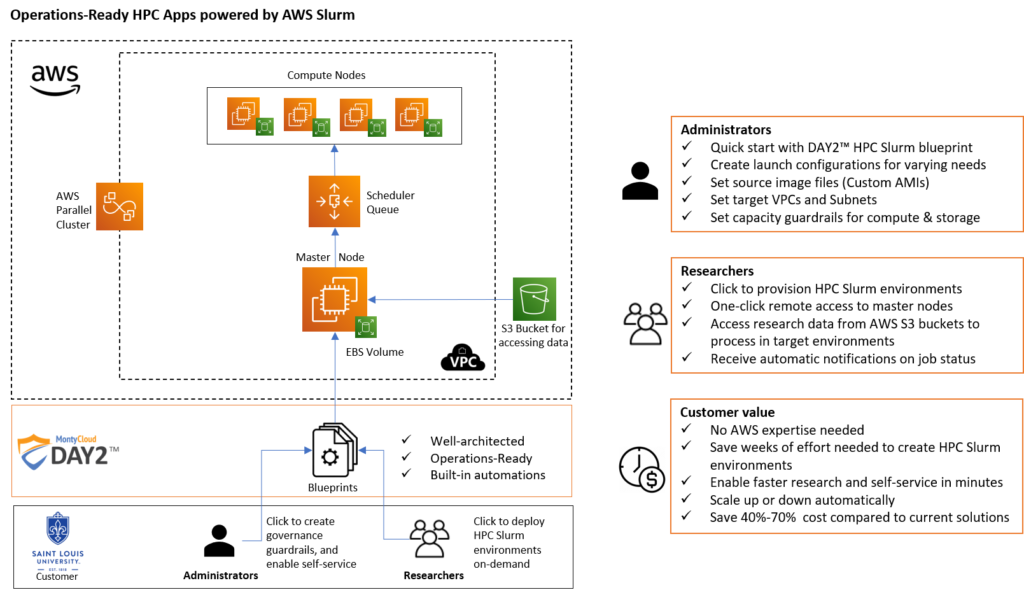

- With readily available HPC blueprints, administrators can save several weeks of effort and enable their teams to create environments on-demand. Administrators can also create deployment guardrails that let faculty and lead researcher admins operate within a ceiling on spend and growth. For example, it is easy to create custom launch configurations for different users and to control elastic properties such as min/max nodes, dimensions of cluster nodes (instance type / CPU-RAM choices), source AMIs, VPCs, subnets, etc. Oftentimes the right-sized configurations save 40% to 70% in AWS costs.

- Researchers can simply click to deploy HPC environments without needing access to the AWS console, and within the configuration guardrails set by administrators. No AWS expertise is needed.

- Researchers can also make optional decisions, as allowed within their guardrails: enable access to Amazon S3 for secure data transfer between the master node and user environments, change min/max nodes, and use spot instances for a more frugal architecture.

- In one click, researchers can connect to the Slurm cluster using the DAY2 Remote Console feature, without requiring a VPN, bastion host, or SSH client, and without exposing the cluster’s management ports and interfaces unnecessarily to the Internet or other networks.

- DAY2 automatically reduces the number of instances when no jobs are present in the queue. Researchers do not need to manually manage the power status of the cluster’s instances, which maximizes cost savings over the life of the cluster.

- Administrators can also enable self-service tasks, such as snapshots and backup operations for data protection, from a catalog of ready-to-use tasks. This reduces communication overhead and delays in operations.
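The guardrail and scale-down behavior described in the list above can be illustrated with a minimal Python sketch. This is not DAY2's implementation: the `Guardrails` class, its fields, both functions, and the one-node-per-job policy are hypothetical names and assumptions introduced here, and the instance types are example values only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Hypothetical administrator-defined limits for one launch configuration."""
    min_nodes: int
    max_nodes: int
    allowed_instance_types: frozenset
    allow_spot: bool = False


def validate_request(g, instance_type, min_nodes, max_nodes, use_spot=False):
    """Return a list of guardrail violations; an empty list means the request is allowed."""
    problems = []
    if instance_type not in g.allowed_instance_types:
        problems.append(f"instance type {instance_type} is not allowed")
    if min_nodes < g.min_nodes or max_nodes > g.max_nodes or min_nodes > max_nodes:
        problems.append(
            f"node range {min_nodes}-{max_nodes} is outside {g.min_nodes}-{g.max_nodes}"
        )
    if use_spot and not g.allow_spot:
        problems.append("spot instances are not allowed")
    return problems


def target_node_count(g, pending_jobs, running_jobs, nodes_per_job=1):
    """Assumed policy: size the cluster to the job count, clamped to the guardrail range."""
    wanted = (pending_jobs + running_jobs) * nodes_per_job
    return max(g.min_nodes, min(g.max_nodes, wanted))


# Example limits an administrator might set (values are illustrative).
limits = Guardrails(
    min_nodes=0,
    max_nodes=8,
    allowed_instance_types=frozenset({"c5.xlarge", "c5.2xlarge"}),
    allow_spot=True,
)
```

With these example limits, a request for an instance type outside the allowed set is rejected with a readable reason, and an empty queue yields a target of `min_nodes`, which is how an idle cluster would shrink without anyone powering instances off by hand.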

The DAY2 differentiation

DAY2 is a No-Code, Autonomous CloudOps platform. Customers can accomplish the desired outcome without writing any additional code and without requiring expertise in AWS or HPC technologies. Unlike off-the-shelf Infrastructure as Code templates, DAY2 does not require customers to build their own images. Unlike existing solutions, researchers have fewer decisions to make when provisioning a cluster and can focus on their research rather than on managing the underlying infrastructure.

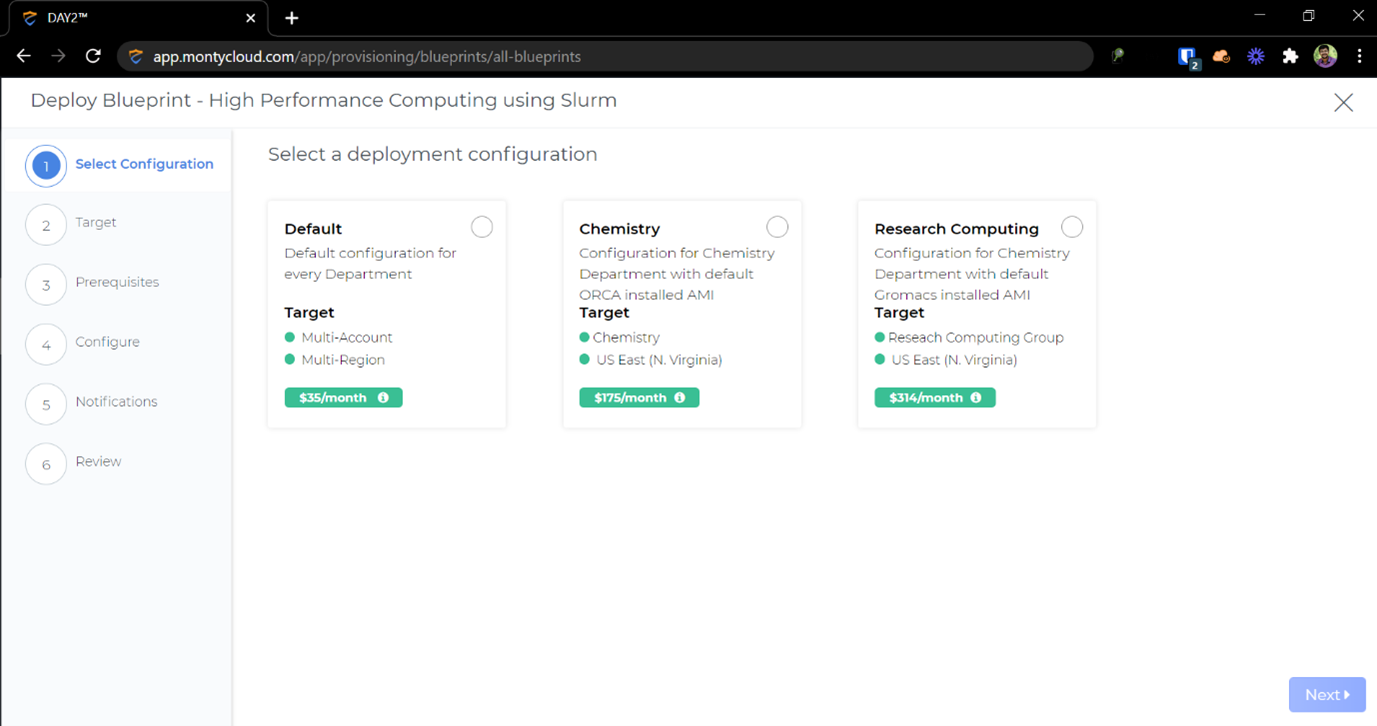

Figure 1: Researchers can use readily available, well-architected HPC blueprints in various configurations available to them.

DAY2 HPC Slurm blueprint

SLU provisions High Performance Computing clusters using the DAY2 HPC Slurm Blueprint for multiple purposes. SLU’s Research Computing Group uses this Blueprint to run molecular dynamics simulations using GROMACS, a molecular dynamics package designed for simulations of proteins, lipids, and nucleic acids. SLU’s Chemistry department also uses this Blueprint to run their ORCA jobs for quantum chemistry. Researchers use DAY2 to provision HPC clusters on demand whenever they need them, submit their jobs, get the output back, and shut down the clusters once the job is done, all in just a few clicks and with no AWS expertise.

Figure 2: Operations-Ready HPC environment with AWS Slurm
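To make the "submit their jobs" step concrete, here is a small Python sketch that renders a Slurm batch script for a GROMACS run. It uses standard `#SBATCH` directives, but the job name, node counts, walltime, and the `render_sbatch_script` helper are illustrative assumptions, as is the presence of an MPI-enabled GROMACS build installed as `gmx_mpi` with an input file named `md.tpr`. The source does not describe how SLU's jobs are actually configured.

```python
def render_sbatch_script(job_name, nodes, tasks_per_node, walltime, command):
    """Render a Slurm batch script as text using standard #SBATCH directives."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "#SBATCH --output=%x-%j.out",  # %x expands to the job name, %j to the job ID
        "",
        f"srun {command}",  # srun launches the tasks across the allocated nodes
    ]
    return "\n".join(lines) + "\n"


# Hypothetical molecular dynamics job; all values are examples.
script = render_sbatch_script(
    job_name="md-run",
    nodes=2,
    tasks_per_node=16,
    walltime="04:00:00",
    command="gmx_mpi mdrun -deffnm md",
)
print(script)
```

Saved to a file such as `md-run.sh`, a script like this would typically be submitted from the cluster's head node with `sbatch md-run.sh`.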

DAY2 HPC using Batch blueprint

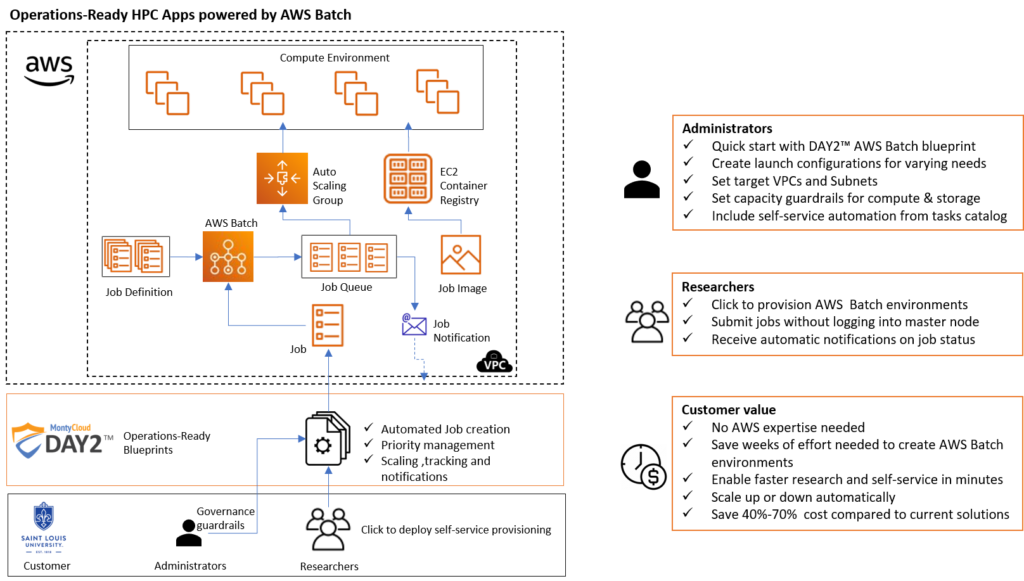

MontyCloud’s DAY2 HPC Batch Blueprint manages all the infrastructure for you, avoiding the complexities of provisioning, managing, monitoring, and scaling your batch computing jobs. By using the HPC Batch Blueprint to move compute-intensive workloads to AWS, customers have gained speed, scalability, and cost savings. The Blueprint automates the resourcing and scheduling of jobs to save costs and deliver job results faster, which accelerates decision-making.

Figure 3: Operations-Ready HPC environment with AWS Batch
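For readers curious what a job submission to AWS Batch looks like underneath, the following Python sketch builds the keyword arguments for the AWS Batch `SubmitJob` API. The field names (`jobName`, `jobQueue`, `jobDefinition`, `containerOverrides`, `resourceRequirements`) follow that API, but the helper function, the queue and job definition names, and the command are hypothetical, and nothing here is taken from the DAY2 Blueprint itself.

```python
def build_batch_job_request(job_name, job_queue, job_definition, command, vcpus, memory_mib):
    """Build keyword arguments for the AWS Batch SubmitJob API call."""
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "containerOverrides": {
            "command": list(command),
            # AWS Batch expects resource values as strings; MEMORY is in MiB.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }


# Hypothetical queue, job definition, and command names.
request = build_batch_job_request(
    job_name="simulation-001",
    job_queue="research-queue",
    job_definition="simulation-job:1",
    command=["python", "simulate.py", "--steps", "1000"],
    vcpus=4,
    memory_mib=8192,
)

# With boto3 installed and AWS credentials configured, the request could be sent with:
#   import boto3
#   boto3.client("batch").submit_job(**request)
```

The sketch stops short of calling AWS so that it runs anywhere; the scheduling, scaling, and retry behavior described above happens on the service side once a job is in the queue.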

With DAY2 Operations-Ready HPC Blueprints, customers can save several weeks of effort and 40-70% in total cost of cloud operations, without requiring sophisticated AWS skills. It is now easy to provision HPC clusters on-demand, with governance guardrails, security standards, and highly automated self-service operations. Administrators can easily enable self-service provisioning, and end users such as researchers can spin up managed environments that they can control easily, accelerating innovation.

Like what you read and want to get started? You can click here and request a demo.